VTA: Versatile Tensor Accelerator

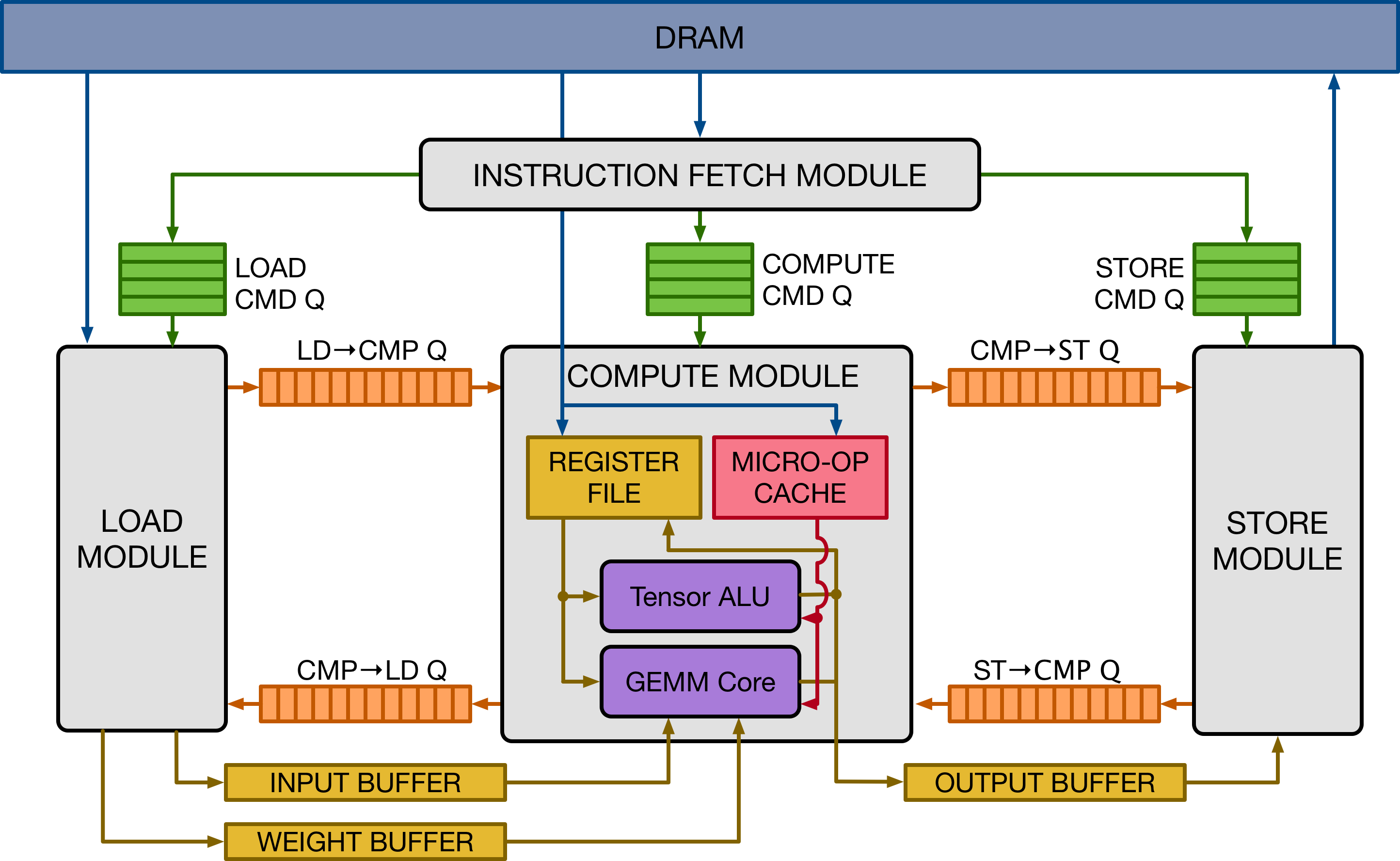

The Versatile Tensor Accelerator (VTA) is an open, generic, and customizable deep learning accelerator with a complete TVM-based compiler stack. We designed VTA to expose the most salient and common characteristics of mainstream deep learning accelerators. Together, TVM and VTA form an end-to-end hardware-software deep learning system stack that includes the hardware design, drivers, a JIT runtime, and an optimizing compiler stack based on TVM.

VTA has the following key features:

Generic, modular, open-source hardware.

Streamlined workflow to deploy to FPGAs.

Simulator support to prototype compilation passes on regular workstations.

Pynq-based driver and JIT runtime for both simulated and FPGA hardware back-ends.

End-to-end TVM stack integration.

This page contains links to all the resources related to VTA:

Literature

Read the VTA release blog post.

Read the VTA tech report: An Open Hardware Software Stack for Deep Learning.